Notes for Prof. Hung-Yi Lee's ML Lecture: Deep Generative Model

Component by Component

Let the model generate the product component by component.

Pixel RNN

Train an RNN that takes all the preceding pixels as input and generates the next pixel. It can be trained on a large collection of images alone, without any annotation.
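Because every pixel serves as the target for the pixels before it, an unlabeled image supplies its own training data. The plain-Python sketch below flattens a grayscale image in raster order, turns it into (preceding pixels, next pixel) pairs, and generates a new image one pixel at a time. `toy_predict_next` is a hypothetical stand-in for a trained RNN (it just averages the last few pixels); a real Pixel RNN would output a distribution over pixel values and sample from it.

```python
from typing import Callable, List, Sequence, Tuple


def raster_order(image: Sequence[Sequence[int]]) -> List[int]:
    """Flatten a 2-D image row by row (the order in which pixels are generated)."""
    return [p for row in image for p in row]


def training_pairs(image: Sequence[Sequence[int]]) -> List[Tuple[List[int], int]]:
    """Each pixel is the target for all pixels before it, so no labels are needed."""
    flat = raster_order(image)
    return [(flat[:i], flat[i]) for i in range(1, len(flat))]


def generate(predict_next: Callable[[List[int]], int], first_pixel: int,
             height: int, width: int) -> List[List[int]]:
    """Generate an image component by component, feeding back every pixel so far."""
    flat = [first_pixel]
    while len(flat) < height * width:
        flat.append(predict_next(flat))
    return [flat[r * width:(r + 1) * width] for r in range(height)]


def toy_predict_next(prefix: List[int]) -> int:
    """Hypothetical stand-in for a trained RNN: mean of the last three pixels."""
    window = prefix[-3:]
    return round(sum(window) / len(window))
```

For example, `training_pairs([[1, 2], [3, 4]])` yields three pairs, `([1], 2)`, `([1, 2], 3)` and `([1, 2, 3], 4)`, one per pixel after the first.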


Auto-Encoder

Usually, simply taking the decoder of a trained auto-encoder and feeding it randomly generated codes does not produce good results. We need some tricks.

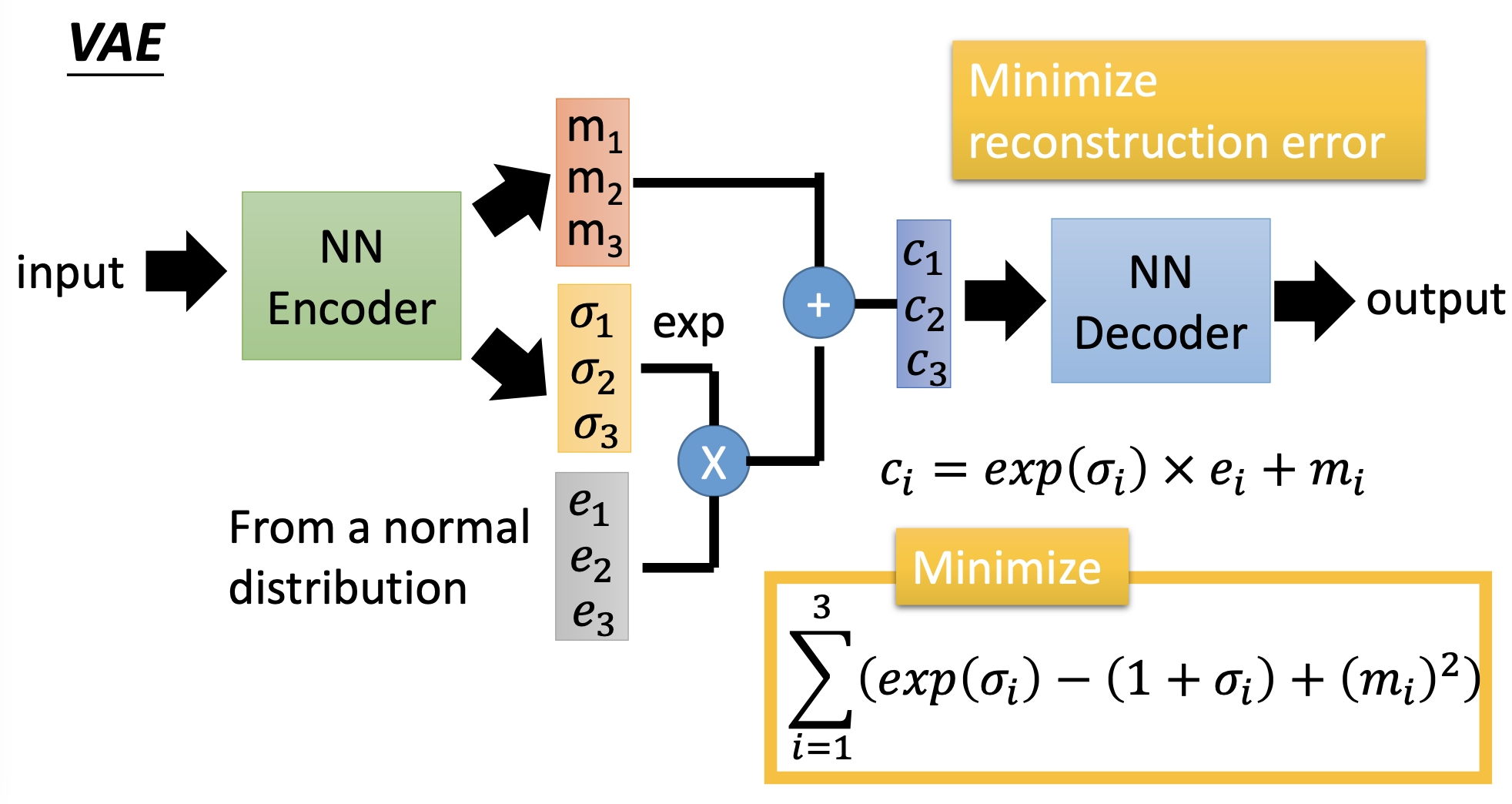

Variational Auto-Encoder

Intuitive explanation of the concept of VAE

We force the generator (decoder) to reconstruct the input object even when its code is perturbed within some range of deviation. As a result, when a code lies near the boundary between two examples, the generator must produce something like a mixture of the neighboring examples in order to minimize the reconstruction error.
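The "range of deviation" is usually implemented by letting the encoder output a mean and a log-variance for each code dimension, sampling the code with added Gaussian noise, and penalizing the code distribution for drifting away from a standard normal; without that penalty the encoder could shrink the variance to zero and cancel the noise. The minimal pure-Python sketch below follows the common formulation from Kingma and Welling rather than the exact notation of the lecture, and the function names are illustrative.

```python
import math
import random
from typing import List, Sequence


def sample_code(mu: Sequence[float], log_var: Sequence[float],
                rng: random.Random) -> List[float]:
    """Reparameterization: z_i = mu_i + sigma_i * eps_i with eps_i ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu: Sequence[float], log_var: Sequence[float]) -> float:
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), summed over code dimensions."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv
                     for m, lv in zip(mu, log_var))


def vae_loss(x: Sequence[float], x_recon: Sequence[float],
             mu: Sequence[float], log_var: Sequence[float]) -> float:
    """Squared reconstruction error plus the KL regularizer."""
    recon = sum((a - b) ** 2 for a, b in zip(x, x_recon))
    return recon + kl_to_standard_normal(mu, log_var)
```

During training the decoder receives `sample_code(mu, log_var, rng)` instead of `mu`, so it has to reconstruct the input from anywhere in the noisy neighborhood of the code.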

Usage

We can vary two dimensions of the code while fixing all the other dimensions to see what each dimension controls, and then use those known roles of the code dimensions to control image generation. Take Pokémon generation as an example.
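The two-dimension sweep can be set up as a grid of codes: start from one base code, overwrite two chosen dimensions with values from a range, and decode every resulting code. This sketch only builds the grid; the decoder that turns each code into an image is not shown, and the dimension indices and value range are arbitrary choices.

```python
from typing import List, Sequence


def traversal_grid(base_code: Sequence[float], dim_x: int, dim_y: int,
                   values: Sequence[float]) -> List[List[List[float]]]:
    """Vary code dimensions dim_x and dim_y over `values`; keep all others fixed."""
    grid = []
    for vy in values:
        row = []
        for vx in values:
            code = list(base_code)  # copy so the base code is not modified
            code[dim_x] = vx
            code[dim_y] = vy
            row.append(code)
        grid.append(row)
    return grid


def linspace(start: float, stop: float, num: int) -> List[float]:
    """Evenly spaced values from start to stop, inclusive (requires num >= 2)."""
    step = (stop - start) / (num - 1)
    return [start + i * step for i in range(num)]
```

With a trained decoder, something like `[[decode(c) for c in row] for row in traversal_grid(code, 0, 1, linspace(-2.0, 2.0, 5))]` (where `decode` is hypothetical) would give a 5x5 sheet of images whose rows and columns reveal what the two dimensions do.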

Discussion

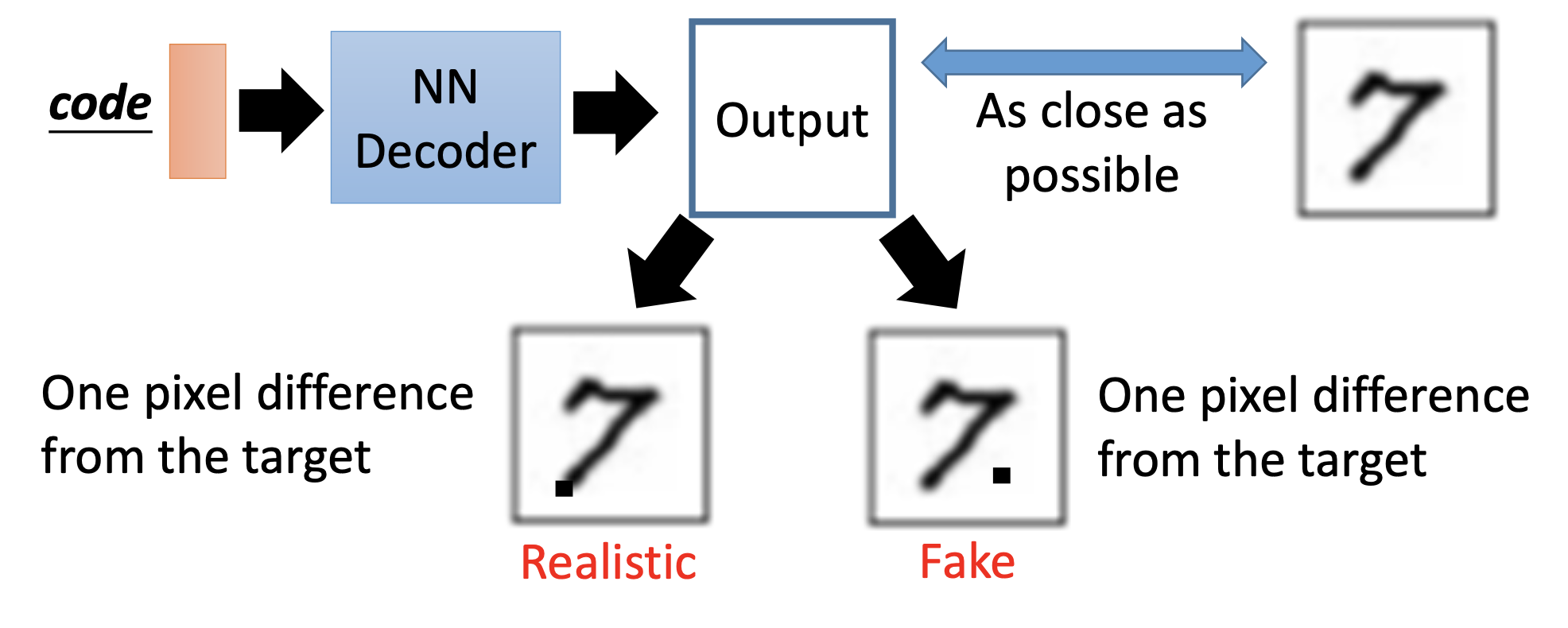

A VAE does not really try to imitate real objects; it only tries to produce objects that are close to the training examples. In other words, a VAE may merely memorize the existing examples instead of generating new objects.
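One way to see why "close to the examples" is not the same as "realistic", assuming the reconstruction error is a pixel-wise squared error: an output that extends a stroke naturally and an output with a stray pixel elsewhere can be exactly equally far from the target, so the loss expresses no preference for the realistic one. The 4x4 binary images below are made-up examples.

```python
from typing import Sequence


def squared_error(a: Sequence[float], b: Sequence[float]) -> float:
    """Pixel-wise squared error between two flattened images."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


# Flattened 4x4 binary images, rows concatenated (made-up data).
target = [0, 1, 0, 0,
          0, 1, 0, 0,
          0, 0, 0, 0,
          0, 0, 0, 0]    # a short vertical stroke
extended = [0, 1, 0, 0,
            0, 1, 0, 0,
            0, 1, 0, 0,
            0, 0, 0, 0]  # stroke continued downward: plausible
stray = [0, 1, 0, 0,
         0, 1, 0, 0,
         0, 0, 0, 0,
         0, 0, 0, 1]     # disconnected pixel in the corner: implausible

print(squared_error(target, extended), squared_error(target, stray))
```

Both errors are 1, even though a person would judge only the first output as a plausible variation of the target.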

Conditional VAE

Given an example and a class, a conditional VAE can generate an object of that class in the style of the example.
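A common way to condition a VAE, assumed here rather than taken from the lecture, is to concatenate a one-hot class vector to the code fed to the decoder. To transfer style, encode the example (the encoder typically also sees the example's own class) to get its code, then pair that same code with the desired class. The sketch below only builds those decoder inputs; the encoder and decoder networks are left out.

```python
from typing import List, Sequence


def one_hot(label: int, num_classes: int) -> List[float]:
    """Class label as a one-hot vector."""
    if not 0 <= label < num_classes:
        raise ValueError("label out of range")
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


def decoder_input(style_code: Sequence[float], label: int,
                  num_classes: int) -> List[float]:
    """Concatenate the example's code (its style) with the class condition."""
    return list(style_code) + one_hot(label, num_classes)


def style_transfer_inputs(style_code: Sequence[float],
                          num_classes: int) -> List[List[float]]:
    """Pair one style code with every class; decoding each gives one object per class."""
    return [decoder_input(style_code, c, num_classes) for c in range(num_classes)]
```

For handwritten digits, for instance, `style_transfer_inputs(code, 10)` would ask the decoder for all ten digits written in the style captured by `code`.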

Generative Adversarial Network

Iteratively train a generator, which generates an object from an input code, and a discriminator, which decides whether an object is a real example or was produced by the generator. When training the generator, we connect the discriminator after the generator, fix the weights of the discriminator, and tune the weights of the generator so that the discriminator classifies the generated objects as real examples.
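The alternating procedure can be sketched on a toy one-dimensional problem in plain Python. Everything here is an assumption for illustration: the generator is G(z) = a*z + b, the discriminator is D(x) = sigmoid(w*x + c), the "real examples" are drawn from N(3, 0.5), gradients are written out by hand, and the generator maximizes log D(G(z)), a common form of "make the discriminator call generated objects real". During the generator step the discriminator's weights are only read, never updated, which is what fixing them means. No claim is made that this toy converges; like real GANs, it can oscillate.

```python
import math
import random
from typing import List


def sigmoid(t: float) -> float:
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


class Generator:
    """Toy generator G(z) = a * z + b (hypothetical)."""

    def __init__(self, a: float = 1.0, b: float = 0.0):
        self.a, self.b = a, b

    def __call__(self, z: float) -> float:
        return self.a * z + self.b


class Discriminator:
    """Toy discriminator D(x) = sigmoid(w * x + c): probability that x is real."""

    def __init__(self, w: float = 0.1, c: float = 0.0):
        self.w, self.c = w, c

    def __call__(self, x: float) -> float:
        return sigmoid(self.w * x + self.c)


def discriminator_step(D: Discriminator, G: Generator, real: List[float],
                       zs: List[float], lr: float) -> None:
    """Gradient ascent on mean log D(real) + mean log(1 - D(G(z))); G is untouched."""
    gw = gc = 0.0
    for x in real:  # d/dt log sigmoid(t) = 1 - sigmoid(t)
        g = (1.0 - D(x)) / len(real)
        gw += g * x
        gc += g
    for z in zs:    # d/dt log(1 - sigmoid(t)) = -sigmoid(t)
        x = G(z)
        g = -D(x) / len(zs)
        gw += g * x
        gc += g
    D.w += lr * gw
    D.c += lr * gc


def generator_step(G: Generator, D: Discriminator, zs: List[float],
                   lr: float) -> None:
    """Gradient ascent on mean log D(G(z)) w.r.t. (a, b); D's weights stay fixed."""
    ga = gb = 0.0
    for z in zs:
        x = G(z)
        g = (1.0 - D(x)) * D.w / len(zs)  # d log D(x) / dx
        ga += g * z                       # dx/da = z
        gb += g                           # dx/db = 1
    G.a += lr * ga
    G.b += lr * gb


def train(steps: int = 2000, batch: int = 64, lr: float = 0.05,
          seed: int = 0) -> Generator:
    """Alternate one discriminator step and one generator step per iteration."""
    rng = random.Random(seed)
    G, D = Generator(), Discriminator()
    for _ in range(steps):
        real = [rng.gauss(3.0, 0.5) for _ in range(batch)]  # real examples
        zs = [rng.gauss(0.0, 1.0) for _ in range(batch)]    # input codes
        discriminator_step(D, G, real, zs, lr)
        zs = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        generator_step(G, D, zs, lr)
    return G
```

Note that this discriminator is monotone in x, so it can only separate samples with a single threshold; fooling it says little about whether the generated distribution actually matches the real one.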

GANs are difficult to train. There is no explicit metric that shows whether the generator is improving. When the discriminator fails, it does not guarantee that the generator produces realistic objects. Usually, the discriminator is simply too weak, and the generator has found some specific object that fools it.

Discussion

How to evaluate performance is a difficult problem when using generative models.

References

YouTube: ML Lecture 17: Unsupervised Learning - Deep Generative Model (Part I)